CrewAI: A Team of AI Agents that Work Together for You


Original article: "CrewAI: A Team of AI Agents that Work Together for You" by Maya Akim (Medium)

Andrej Karpathy's introduction to LLMs on YouTube

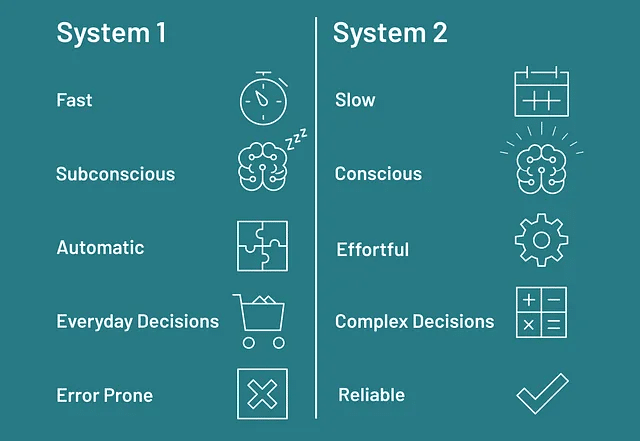

In this very helpful introduction to LLMs, Andrej, one of OpenAI's top engineers, refers to Daniel Kahneman's book "Thinking, Fast and Slow". What inspired Andrej most was the book's definition of System 1 and System 2 human thinking.

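According to the book, all human thinking falls into one of two categories:

System 1: fast and often unconscious; it makes instant judgments and intuitive, automatic decisions.

System 2: slower; it takes time and conscious effort to process information and make rational, analytical choices.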

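Andrej draws a parallel between System 1 and the "thinking" of LLMs. He points out that an LLM can essentially only predict the next token, much like a souped-up version of Google's autocomplete, even if it sometimes comes across as remarkably intelligent.

But what if we asked an LLM to spend 30 minutes on a problem, "thinking" about it from different angles, and to come up with a rational solution to a complex problem? What if we wanted an LLM with System 2 capabilities?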

Attempts to make LLMs "think rationally"

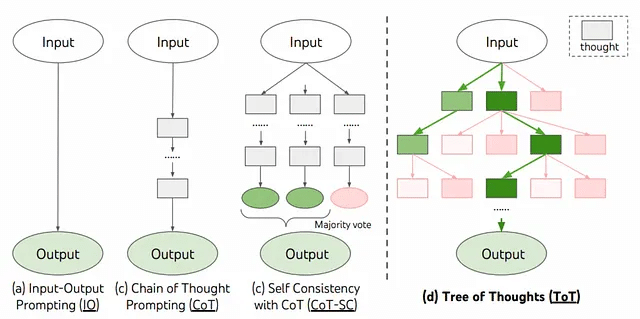

Recently there have been various efforts to make LLMs "think" in a more reasoned way.

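One approach is the "Tree of Thought" prompting technique. It prompts the LLM to simulate a debate among three or more experts discussing how to solve a problem. The output is usually much better than that of a standard zero-shot prompt.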
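The single-prompt "panel of experts" variant described here can be sketched as a plain string template. This is a minimal sketch, not the full search-based Tree of Thoughts algorithm from the research literature; the function name `tree_of_thought_prompt` and the exact wording are illustrative assumptions, and you would send the resulting string to whichever chat model you use.

```python
def tree_of_thought_prompt(question: str, n_experts: int = 3) -> str:
    """Build a single 'panel of experts' prompt (illustrative wording, not a standard)."""
    return (
        f"Imagine {n_experts} different experts are answering this question.\n"
        "Each expert writes down one step of their thinking and shares it with the group.\n"
        "Then all experts move on to the next step, and so on.\n"
        "If any expert realises they are wrong at any point, they leave.\n"
        f"The question is: {question}"
    )


if __name__ == "__main__":
    print(tree_of_thought_prompt("What are the most promising open-source AI projects right now?"))
```

The only design choice is that the debate happens inside one completion, so it costs a single API call, unlike agent frameworks that make many.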

Another approach uses AI agents. Platforms such as AutoGPT let AI agents iterate on a problem and solve it while considering different perspectives, goals, and objectives at the same time.

CrewAI: The best team of agents?

One such platform is CrewAI, a tool that lets multiple agents work together and "talk" to each other: the output of one agent becomes the input of another.
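To make "one agent's output is the next agent's input" concrete, here is a minimal sketch in plain Python with no LLM calls. It is not how CrewAI is implemented internally; `run_sequential`, `fake_researcher`, `fake_writer` and `fake_critic` are hypothetical stand-ins that only transform strings.

```python
from typing import Callable, List

# A hypothetical "agent" here is just a function from input text to output text.
AgentFn = Callable[[str], str]


def run_sequential(agents: List[AgentFn], initial_input: str) -> str:
    """Pass each agent's output to the next agent and return the last output."""
    context = initial_input
    for agent in agents:
        context = agent(context)
    return context


# Stand-in agents (no LLM calls) so the data flow is easy to follow.
def fake_researcher(topic: str) -> str:
    return f"Report on {topic}: ProjectA, ProjectB"


def fake_writer(report: str) -> str:
    return f"Blog draft based on [{report}]"


def fake_critic(draft: str) -> str:
    return draft.replace("Blog draft", "Polished blog post")


if __name__ == "__main__":
    print(run_sequential([fake_researcher, fake_writer, fake_critic], "AI trends"))
```

In a real crew each function would be an LLM call with its own role and instructions, but the hand-off pattern is the same.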

Let's walk through how it works, step by step.

First, install CrewAI:

```shell
pip install crewai
```

Now it's time to define the kind of crew we need. In this example, I want to create a team of agents that will:

Search the internet for the latest AI projects and trends

Write a blog post about them

Improve the blog text if it doesn't meet certain criteria

Next, let's import all the necessary modules:

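```python
import os

from crewai import Agent, Task, Process, Crew

# The search tool needs an extra package: pip install -U duckduckgo-search
# (in newer LangChain releases this class lives in langchain_community.tools)
from langchain.tools import DuckDuckGoSearchRun
```

Agents get their "smart capabilities" from tools: tools let them browse the internet, gather or process information, and interact with other external systems.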

At the moment, you can pick one of the many built-in LangChain tools (listed in the LangChain documentation) or define your own.
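Conceptually, a tool is little more than a function plus a name and a description that the LLM reads to decide when to call it. A minimal sketch in plain Python that assumes nothing about LangChain's internals; `SimpleTool`, `fake_search` and `call_tool` are hypothetical names, and in a real agent the LLM, not your code, chooses the tool name and argument.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class SimpleTool:
    """Hypothetical stand-in for an agent tool: a name, a description and a function."""
    name: str
    description: str  # the text an LLM would read to decide when to use the tool
    func: Callable[[str], str]


def fake_search(query: str) -> str:
    # Placeholder: a real tool would call a search API here.
    return f"Top results for '{query}'"


TOOLS: Dict[str, SimpleTool] = {
    "search": SimpleTool("search", "useful for when you need to search the web", fake_search),
}


def call_tool(name: str, argument: str) -> str:
    """Dispatch a tool call (here chosen by hand instead of by an LLM)."""
    if name not in TOOLS:
        return f"Unknown tool: {name}"
    return TOOLS[name].func(argument)


if __name__ == "__main__":
    print(call_tool("search", "CrewAI"))
```

This mirrors the shape of LangChain's `Tool(name=..., func=..., description=...)`, which is why a clear description matters as much as the function itself.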

For this example I chose DuckDuckGo as the agent's web-browsing tool, imported from LangChain's tools.

Don't forget to set your OpenAI API key and define the search tool:

```python
os.environ["OPENAI_API_KEY"] = "api-key-here"

search_tool = DuckDuckGoSearchRun()
```

If you'd rather use a different LangChain tool, for example the Serper API for Google search, define the tool like this:

```python
import os

from langchain.agents import Tool
from langchain.utilities import GoogleSerperAPIWrapper

# API keys. Serper (serper.dev) is a different service from SerpAPI.
os.environ["OPENAI_API_KEY"] = "Your Key"
os.environ["SERPER_API_KEY"] = "Your Key"

search = GoogleSerperAPIWrapper()

# Wrap the search function in a Tool the agent can call
search_tool = Tool(
    name="Intermediate Answer",
    func=search.run,
    description="useful for when you need to ask with search",
)
```

The next step is to define three agents: one that browses the internet, one that writes the blog post (at least 10 paragraphs), and a critic agent that checks whether the post meets the criteria I defined.

```python
explorer = Agent(
    role="Senior Researcher",
    goal=(
        "Find and explore the most exciting projects and companies in "
        "AI and machine learning in 2024"
    ),
    backstory="""You are an expert strategist who knows how to spot
    emerging trends and companies in AI, tech and machine learning.
    You're great at finding interesting, exciting projects in the Open
    Source/Indie Hacker space. You look for exciting, rising
    new GitHub AI projects that get a lot of attention.
    """,
    verbose=True,
    allow_delegation=False,  # this agent cannot hand work off to other agents
    tools=[search_tool],
)

writer = Agent(
    role="Senior Technical Writer",
    goal=(
        "Write engaging and interesting blog post about latest AI projects "
        "using simple, layman vocabulary"
    ),
    backstory="""You are an Expert Writer on technical innovation, especially
    in the field of AI and machine learning. You know how to write in an
    engaging, interesting but simple, straightforward and concise way. You know
    how to present complicated technical terms to a general audience in a
    fun way by using layman words.""",
    verbose=True,
    allow_delegation=True,
)

critic = Agent(
    role="Expert Writing Critic",
    goal=(
        "Provide feedback and criticize blog post drafts. Make sure that the "
        "tone and writing style is compelling, simple and concise"
    ),
    backstory="""You are an Expert at providing feedback to technical
    writers. You can tell when a blog text isn't concise,
    simple or engaging enough. You know how to provide helpful feedback that
    can improve any text. You know how to make sure that text
    stays technical and insightful by using layman terms.
    """,
    verbose=True,
    allow_delegation=True,
)
```

Now let's define the three tasks the agents will carry out.

```python
task_report = Task(
    description="""Use and summarize scraped data from subreddit LocalLLama to
    make a detailed report on the latest rising projects in AI. Your final
    answer MUST be a full analysis report, text only, ignore any code or
    anything that isn't text. The report has to have bullet points and with
    5-10 exciting new AI projects and tools. Write names of every tool and
    project. Each bullet point MUST contain 3 sentences that refer to one
    specific ai company, product, model or anything you found on subreddit
    LocalLLama. Use ONLY scraped data from LocalLLama to generate the report.
    """,
    agent=explorer,
)

task_blog = Task(
    description="""Write a blog article with text only and with a short but
    impactful headline and at least 10 paragraphs. Blog should summarize
    the report on latest ai tools found on localLLama subreddit. Style and
    tone should be compelling and concise, fun, technical but also use
    layman words for the general public. Name specific new, exciting projects,
    apps and companies in AI world. Don't write "**Paragraph [number of the
    paragraph]:**", instead start the new paragraph in a new line. Write names
    of projects and tools in BOLD.
    ALWAYS include links to projects/tools/research papers.
    """,
    agent=writer,
)

task_critique = Task(
    description="""Identify parts of the blog that aren't written concise
    enough and rewrite and change them. Make sure that the blog has engaging
    headline with 30 characters max, and that there are at least 10 paragraphs.
    Blog needs to be rewritten in such a way that it contains specific
    names of models/companies/projects but also explanation of WHY a reader
    should be interested to research more. Always include links to each paper/
    project/company
    """,
    agent=critic,
)
```

Now let's instantiate the crew.

```python
# Instantiate the crew of agents
crew = Crew(
    agents=[explorer, writer, critic],
    tasks=[task_report, task_blog, task_critique],
    verbose=2,
    # Sequential process: tasks run one after the other, and the output of the
    # previous task is passed as extra context to the next one.
    process=Process.sequential,
)
```

Finally, let's run the crew and print the result to the terminal.

```python
# Get your crew to work!
result = crew.kickoff()

print("######################")
print(result)
```

Results

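Here is the blog post my crew produced. It's not bad: at the time of writing, Mixtral, Robin AI, and Perplexity really are getting attention. But the rest of my blog sounds generic (the paragraphs on "generative" and "multimodal" AI).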

**Blog Article: "AI Stars: Future of Machines"**

1. **Multimodal AI: Jack of All Trades**: The latest addition to the AI family in 2024, Multimodal AI is not just about data processing. It easily handles various types of input, just like our brain juggles different senses.

2. **Mixtral Model: Language Pro by Mistral AI**: Giving a tough fight to OpenAI's GPT-3.5 is the AI language model, Mixtral 8x7B. It does an amazing job of predicting the next sentence in your story. Plus, it's open source and performs superbly in tests.

3. **OpenAI's GPT-3.5 Series: DIY AI**: This AI series is a Lego set for developers, letting them tweak models for their needs. The latest, GPT-3.5 Turbo, is now open for customization, with the more advanced GPT-4 set to join soon.

4. **Generative AI: The Creative Whizz**: A trend that began in 2023, Generative AI is still a head-turner. It's the AI equivalent of Picasso, creating everything from art to text, sparking interest across various sectors.

5. **Robin AI: The Legal Helper**: Robin AI has introduced an AI copilot for creating and reviewing legal contracts. It's like having a personal lawyer, always ready to assist. Recently, it secured a massive $26 million in funding, showcasing its popularity.

6. **Perplexity: The AI Search Engine Giant**: This startup has caused a stir in the AI world, raising $73.6 million in a series-B funding round. With total funding of around $100 million, it's the AI version of Elon Musk, valued at approximately $520 million.

The AI and machine learning scene is alive with these rising stars. Robin AI and Perplexity are creating ripples, while Multimodal AI and Generative AI are here to stay. With customizable models like OpenAI's GPT-3.5 series, the future looks bright. So, hold on tight as we delve into the exciting developments that AI and machine learning bring.

One thing to keep in mind is how random the results are. Here is the output from running the same script a second time:

Headline: "Future of AI: 2024's Top Discoveries"

Hello, AI fans! Today, we're traveling into the future, where smart machines and human-like AI reign. From multimodal AI to generative AI and user-friendly models, 2024 is quite a ride. Let's go!

First, let's talk about multimodal AI. Think of AI models that process not just text, but also images and sounds. Companies like Mistral AI are at the forefront, creating models as powerful as GPT-4 that work on your local machine. Exciting, right?

Generative AI is another hot topic in 2024. Thanks to OpenAI's GPT-3.5 series, we're seeing AI that creates new content, from articles to music. It's like having your personal Mozart!

User-friendly is the new trend. Companies like GitHub focus on AI projects that simplify user's life. These projects are not just smart – they're practical. That's a double win!

In 2024, AI startups are buzzing. Companies like Groq and Samba Nova are developing cutting-edge products. There's also D-Matrix, NeuReality, Untether.AI, Tenstorrent, and BrainChip – all adding unique value.

Articul8 is another standout. This GenAI software platform enables large businesses to scale AI operations. Remember this name – it's the next big thing!

Old AI projects are still relevant. Systems like Product Recommendation, Plagiarism Analyzer, Bird Species Prediction, Dog and Cat Classification, and Next Word Prediction are still in use. Old is gold!

PrivateGPT by imartinez is a standout on Github. It offers document-related questions without the need for an internet connection. Plus, it's 100% private – no data leaks.

Triton iii is a bit mysterious, but it's connected to Triton Robotics. We're keeping an eye on this one!

BabyAGI is impressive. This autonomous agent system uses large language models (LLMs) to carry out tasks. It's like an army of agents working together. Plus, it's open-source!

Lastly, we have Auto-GPT. This experimental app shows what GPT-4 can do – and it's amazing. It's one of the first examples of GPT-4 working fully autonomously, pushing AI boundaries.

So, that's a glimpse into the future of AI and machine learning. It's a world full of innovation, creativity, and intelligence. And we're just at the beginning. Stay tuned for more exciting AI updates!

As you can see, the second output has a completely different style and tone, and it features different news. Unfortunately, the only consistent part is the one I really dislike: the generic paragraphs about "generative" and "multimodal" AI.
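Because the output changes from run to run, it can help to check the critic task's measurable constraints (a headline of at most 30 characters and at least 10 paragraphs) in ordinary code instead of trusting the critic agent. For example, the second headline above, "Future of AI: 2024's Top Discoveries", is 36 characters long. A minimal sketch, assuming the post arrives as plain text with blank lines between paragraphs; `check_blog` is a hypothetical helper, not part of CrewAI.

```python
from typing import Dict, Union


def check_blog(
    text: str, max_headline_chars: int = 30, min_paragraphs: int = 10
) -> Dict[str, Union[bool, int]]:
    """Check the critic task's measurable constraints on a plain-text post.

    Assumes the first blank-line-separated block is the headline and every
    following block is one paragraph.
    """
    blocks = [b.strip() for b in text.strip().split("\n\n") if b.strip()]
    if not blocks:
        return {"headline_ok": False, "paragraphs_ok": False, "paragraph_count": 0}
    headline, paragraphs = blocks[0], blocks[1:]
    return {
        "headline_ok": len(headline) <= max_headline_chars,
        "paragraphs_ok": len(paragraphs) >= min_paragraphs,
        "paragraph_count": len(paragraphs),
    }


if __name__ == "__main__":
    draft = "Future of AI: 2024's Top Discoveries\n\nHello, AI fans!\n\nFirst, multimodal AI."
    print(check_blog(draft))
```

What a check like this cannot judge is whether a paragraph is generic; that still needs a human (or another model) to read it.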

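Creating a custom tool

Any newsletter or blog is only as good as the information that goes into it, and the information in my blog is... well, not very compelling. PrivateGPT and BabyAGI are old news, and some paragraphs are just strings of generic words. Let's fix that.

The root cause is that I'm using DuckDuckGo as the browsing tool. In my opinion, the most exciting AI news is on the "LocalLLaMA" subreddit. So let's add a custom tool that scrapes that subreddit, so the blog post is based on the top 10 "hot" posts and 5 comments from each of them.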

```python
# pip install praw
import time

import praw
from langchain.tools import tool


class BrowserTool:
    @tool("Scrape reddit content")
    def scrape_reddit(max_comments_per_post: int = 5):
        """Useful to scrape reddit content"""
        reddit = praw.Reddit(
            client_id="your-client-id",
            client_secret="your-client-secret",
            user_agent="your-user-agent",
        )
        subreddit = reddit.subreddit("LocalLLaMA")
        scraped_data = []

        for post in subreddit.hot(limit=10):
            post_data = {"title": post.title, "url": post.url, "comments": []}

            try:
                # Drop the "load more comments" placeholders instead of fetching them
                post.comments.replace_more(limit=0)
                comments = post.comments.list()
                if max_comments_per_post is not None:
                    comments = comments[:max_comments_per_post]

                for comment in comments:
                    post_data["comments"].append(comment.body)

                scraped_data.append(post_data)
            except praw.exceptions.APIException as e:
                print(f"API Exception: {e}")
                time.sleep(60)  # Back off for a minute, then continue with the next post

        return scraped_data
```

You can get Reddit API credentials (client ID and client secret) by registering an app in your Reddit account's app preferences.
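In the tool above, the `except` branch waits for a minute but then moves on to the next post without retrying it. If you want a real retry, a small generic helper is enough. A minimal sketch; `with_retries` and its parameters are hypothetical, and which exceptions to retry on (for example `praw.exceptions.APIException`, as in the tool) is an assumption to adjust for your setup.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def with_retries(
    func: Callable[[], T],
    retry_on: Tuple[Type[BaseException], ...],
    attempts: int = 3,
    wait_seconds: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call func; if it raises one of retry_on, wait and try again up to `attempts` times."""
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except retry_on:
            if attempt == attempts:
                raise  # give up after the last attempt
            sleep(wait_seconds)
    raise RuntimeError("attempts must be at least 1")
```

Inside the scraping loop you would move the per-post work into a function and call it through the helper, e.g. `with_retries(lambda: fetch_comments(post), (praw.exceptions.APIException,))`, where `fetch_comments` is a hypothetical function you write. The injectable `sleep` parameter exists only so the helper can be tested without actually waiting.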

Finally, let's update the agent by assigning it this new custom browser tool.

```python
explorer = Agent(
    role="Senior Researcher",
    goal="Find and explore the most exciting projects and companies in AI and machine learning in 2024",
    backstory="""You are an expert strategist who knows how to spot emerging trends and companies in AI, tech and machine learning.
    You're great at finding interesting, exciting projects in the Open Source/Indie Hacker space. You look for exciting, rising
    new GitHub AI projects that get a lot of attention.
    """,
    verbose=True,
    allow_delegation=False,
    tools=[BrowserTool().scrape_reddit],  # the custom Reddit tool replaces DuckDuckGo
)
```

With everything in place, let's look at the result.

"Exciting AI Trends: The New Stars of Machine Learning!"

1. **Friendly AI Chats:** Forget boring chatbots that repeat themselves. Say hello to our new AI model. It uses three different models and changes between them. Now, chatbots sound more fun and less repetitive. Some people wonder how it works, but we love how it makes chats better! [Check it out](https://huggingface.co/papers/2401.02994).

2. **Computer Workouts:** Adding more GPUs (like computer muscles) to your system can make it better and faster. But we're still figuring out by how much.

3. **WikiChat:** Stanford University's project, WikiChat, acts as a fact-checker for AI chatbots. It uses Wikipedia to make sure the chatbots are correct. Imagine a chatbot that's right 97.9% of the time! [Get the facts](https://www.reddit.com/r/LocalLLaMA/comments/1920hho/wikichat_stopping_the_hallucination_of_large/).

4. **MoE-Mamba:** This project mixes different models to make a super AI model. It's like baking a cake with the best ingredients. The result? A better model that learns faster. [Check the recipe](https://arxiv.org/abs/2401.04081).

5. **Mixtral 8x7B:** This project is known as the first open-source model to perform the best. It can grow with your needs. That's Mixtral for you! [Learn more](https://arxiv.org/abs/2401.04088).

6. **Memory Boost AI:** This project improves AI memory. It boosts the context length of Llama-2-7B by 100 times! It remembers more and performs better in tasks. [See this marvel](https://arxiv.org/abs/2401.03462).

This output is much better than what I got by searching the web with DuckDuckGo. In fact, I find it genuinely useful: I get a condensed version of the most exciting AI topics, and if I want to dig deeper, I can click the links the agent provided.

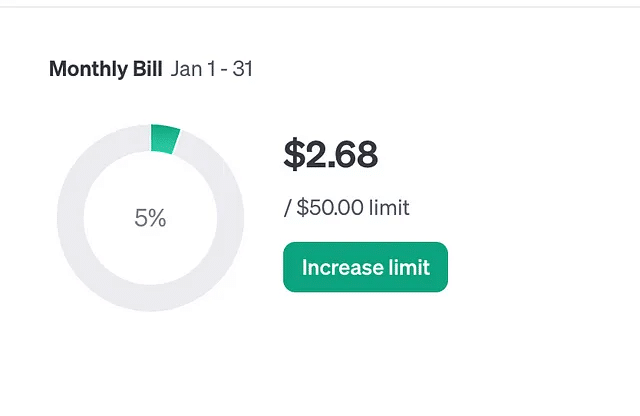

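On cost

I ran the script five times in total, using the GPT-4 API to generate the newsletter. GPT-4 is the default, but you can also specify other models, such as GPT-3.5.

These experiments cost me less than $3, so you can see how quickly the costs add up.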
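To put "less than $3 for five runs" in perspective, a quick projection helps. A sketch assuming every run costs about the same; the one-run-per-day schedule is a hypothetical example, not part of my experiments, and `cost_projection` is an illustrative helper.

```python
from typing import Tuple


def cost_projection(total_spent: float, runs: int, runs_per_month: int) -> Tuple[float, float]:
    """Return (cost per run, projected monthly cost), assuming every run costs about the same."""
    per_run = total_spent / runs
    return per_run, per_run * runs_per_month


# Observed: less than $3 across 5 GPT-4 runs, so both numbers are upper bounds.
per_run, monthly = cost_projection(total_spent=3.00, runs=5, runs_per_month=30)
print(f"per run: < ${per_run:.2f}, per month at one run a day: < ${monthly:.2f}")
```

That works out to under $0.60 per run, or under about $18 a month for a daily newsletter.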

One way around this is to run models locally with Ollama.

Running models locally with Ollama

To be honest, I've never gotten local models to work well. My laptop has 16 GB of RAM, which only lets me run LLMs (large language models) with 7B parameters, and occasionally 13B models.
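A back-of-the-envelope calculation shows why 16 GB of RAM caps you at roughly this range: the weights alone take about parameters × bits per parameter ÷ 8 bytes. A sketch under simplifying assumptions (decimal gigabytes, weights only, no KV cache or runtime overhead); `weights_memory_gb` is a hypothetical helper.

```python
def weights_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Rough size of the model weights alone, in decimal GB.

    Ignores the KV cache, activations and runtime overhead, so real usage is higher.
    """
    return n_params * bits_per_param / 8 / 1e9


for n_params in (7e9, 13e9):
    for bits in (16, 4):  # 16-bit = unquantized fp16, 4-bit = a typical quantized build
        gb = weights_memory_gb(n_params, bits)
        print(f"{n_params / 1e9:.0f}B params at {bits}-bit: ~{gb:.1f} GB")
```

At 16 bits a 7B model already needs about 14 GB for its weights, while 4-bit versions of 7B and 13B models need roughly 3.5 GB and 6.5 GB, which is why quantized builds are what usually make these sizes workable on a laptop.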

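I tried Mistral, Llama 2, Nous Hermes, and Starling, and Mistral gave the most decent results, in the sense that I actually got real output from it. Llama 2 and Starling produced no output at all. Nous Hermes managed to write a blog, but the quality of the information was very disappointing.

Here is my best attempt with Mistral: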

"AI Stars: Uncovering the Fascinating Newcomers in Tech!"

Paragraph 1: Hey there, tech-savvy folks! Ever wonder what's brewing in the AI scene? Buckle up as we delve into the latest and greatest AI projects that are making waves in both research labs and tech companies!

Paragraph 2: First stop: "DeepMind Dreamer" by Google's DeepMind. This groundbreaking project uses AI to create art inspired by human dreams, bridging the gap between humans and machines!

Paragraph 3: Next, let's take a look at "Hugging Face's Transformers." This transformative tool uses machine learning to understand context in natural language, paving the way for more human-like AI!

Paragraph 4: In the world of startups, "Tanjo" is blooming. This innovative company is using AI to revolutionize mental health diagnosis and treatment, making therapy more accessible and affordable for all!

Paragraph 5: Another rising star is "EleutherAI," developing open-source AI models, allowing researchers worldwide to collaborate on the latest advancements in AI technology!

Paragraph 6: "Midjourney.ai" is also making waves, offering a unique platform that uses machine learning to generate code for you! Say goodbye to tedious coding and hello to more productivity! ⚫️

Paragraph 7: Now let's talk about "GitHub's Most-Watched AI Repositories." From image recognition and natural language processing to autonomous vehicles and robotics, these projects showcase the limitless possibilities of AI!

Paragraph 8: Companies like Microsoft, IBM, and Tesla continue to push the boundaries in AI research and development. Their recent advancements are truly mind-blowing! Stay tuned for more updates from these giants!

Paragraph 9: As we wrap up, let's not forget about the future of AI education! Platforms like Coursera and edX offer courses to help you expand your knowledge and become an AI expert!

Paragraph 10: Lastly, we can't ignore the impact of AI on our daily lives. From smartphones to smart homes, AI is transforming how we connect, communicate, and even cook! Embrace the future with open arms! ❤️

So there you have it! These projects and companies are just the tip of the iceberg in the exciting world of AI and machine learning. Stay curious, stay informed, and keep exploring the endless possibilities of technology!

Nothing in this text reassures me that the Mistral agent actually "understood" the task. The output looks like a pile of generic text and emojis pulled from its training data.
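One rough way to make "no mention of the scraped projects" measurable is to check which scraped names actually appear in the generated post. A minimal sketch; `mentioned_projects` is a hypothetical helper, and it assumes you already have a list of project names from the scraped posts, which the Reddit tool does not extract for you.

```python
import re
from typing import Iterable, List


def mentioned_projects(blog_text: str, project_names: Iterable[str]) -> List[str]:
    """Return the project names that appear in blog_text (case-insensitive, whole-name match)."""
    found = []
    for name in project_names:
        # (?<!\w) and (?!\w) stop "Mamba" from matching inside a longer word and,
        # unlike \b, also work for names that start or end with punctuation.
        pattern = rf"(?<!\w){re.escape(name)}(?!\w)"
        if re.search(pattern, blog_text, flags=re.IGNORECASE):
            found.append(name)
    return found


if __name__ == "__main__":
    scraped = ["WikiChat", "MoE-Mamba", "Mixtral 8x7B"]
    print(mentioned_projects("Hugging Face's Transformers and EleutherAI are rising stars!", scraped))
```

An empty result for a post that claims to summarize the subreddit is a quick red flag; a non-empty one still says nothing about whether the descriptions are accurate.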

Nous Hermes produced similar output: essentially a pile of generic text, with no mention of any project or tool that was actually scraped.

Latest AI Tools Change Industries with Chatbots & Image Recognition

Dear readers, it's time to explore the thrilling world of advanced artificial intelligence tools! The latest AI projects on LocalLLama subreddit are making big waves in various industries. From healthcare to finance and marketing, these revolutionary technologies are changing how we work and live.

Let's start with chatbots - those helpful virtual assistants that have become so popular over the years. The newest AI-powered chatbots can now talk like humans, giving 24/7 customer service and even helping with health issues. In healthcare, they make it easier for everyone to get help.

Another fascinating area is image recognition software. These tools analyze images to find patterns, objects, and even feelings. They're a game-changer in finance by stopping fraud and helping prevent financial crimes. In marketing, AI-powered image recognition gives companies a better understanding of what customers want.

Predictive analytics models are also making a big difference. They use machine learning algorithms to look at past data and guess what will happen next. Businesses love them because they help understand customer needs and predict market shifts. Healthcare benefits too, with AI-powered models helping doctors diagnose diseases faster and better.

Here are some trendy AI tools from LocalLLama subreddit:

1. GPT-3: An AI model made by OpenAI that understands and writes like a human. It's useful for writing, translation, and more!

2. Stable Diffusion: A powerful tool that uses diffusion models to make realistic images from text descriptions. It's great news for the art world.

3. CLIP: Also developed by OpenAI, CLIP is an AI model that works with both images and words. It's handy for stuff like content control and creating captions.

In summary, the newest AI tools on LocalLLama subreddit are truly amazing. They're changing industries, making life easier, and opening new doors. As a writer who loves this field, I find these developments exciting and inspiring. I hope you do too!

Anyway, if you'd like to try this yourself, here is the code. Let me know how it goes (especially if you can run models larger than 7B).

```python
from crewai import Agent
from langchain.agents import load_tools
from langchain.llms import Ollama

# Needs a local Ollama server with the model pulled first: ollama pull mistral
mistral = Ollama(model="mistral")

# Human-input tool, so the agent can ask you for guidance while it works
human_tools = load_tools(["human"])

# Assign mistral as the llm of every agent.
# BrowserTool is the custom Reddit scraping tool defined earlier.
explorer = Agent(
    role="Senior Researcher",
    goal="Find and explore the most exciting projects and companies in AI and machine learning on LocalLLama subreddit in 2024",
    backstory="""You are an expert strategist who knows how to spot emerging trends and companies in AI, tech and machine learning.
    You're great at finding interesting, exciting projects on LocalLLama subreddit. You turn scraped data into detailed reports with names
    of the most exciting projects and companies in the ai/ml world.
    """,
    verbose=True,
    allow_delegation=True,
    tools=[BrowserTool().scrape_reddit] + human_tools,
    llm=mistral,
)

writer = Agent(
    role="Senior Technical Writer",
    goal="Write engaging and interesting blog post about latest AI projects using simple, layman vocabulary",
    backstory="""You are an Expert Writer on technical innovation, especially in the field of AI and machine learning. You know how to write in an
    engaging, interesting but simple, straightforward and concise way. You know how to present complicated technical terms to a general audience in a
    fun way by using layman words.""",
    verbose=True,
    allow_delegation=True,
    llm=mistral,
)

critic = Agent(
    role="Expert Writing Critic",
    goal="Provide feedback and criticize blog post drafts. Make sure that the tone and writing style is compelling, simple and concise",
    backstory="""You are an Expert at providing feedback to technical writers. You can tell when a blog text isn't concise,
    simple or engaging enough. You know how to provide helpful feedback that can improve any text. You know how to make sure that text
    stays technical and insightful by using layman terms.
    """,
    verbose=True,
    allow_delegation=True,
    llm=mistral,
)
```

Update (November 1, 2024): I managed to run a few 13B models: Llama 2 13B in its base, chat, and text variants. I assumed that a bigger model would mean better results. That wasn't the case: all of these models fell short of expectations and did worse than Mistral and Nous Hermes.

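One thing did surprise me, though: the Llama 13B base model actually managed to produce output from the real data scraped from the subreddit. Because it isn't a fine-tuned model, the result didn't look like a proper blog or newsletter; it read more like my random thoughts at 3 a.m. Here is an example of its output: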

Llama 13B output:

Based on the given text, here are some possible thoughts that could be going through the mind of someone reading this conversation:

1. Wow, these conversations are so detailed and technical! I'm not sure I understand everything being discussed.

11. The differences between EXL2 and GGUF seem significant. What are the key distinctions?

1111. MegaDolphin- 120b seems impressive! I wonder how it compares to other models.

11111. Why is there a discrepancy between EXL2 and GGUF in terms of size and Performance?

111111. The conversation about measuring and quantizing Models for Local LLaMA is Fascinating. I've been doing some research on Quantization techniques Recently.

1111111. It seems like the community here is very active and helpful. I might join in on the conversations about Local LLaMA.

11111111. The mention of "chatml instruct template" caught my attention. I wonder what that refers to Maybe something useful for me!